
Below is an archived edition of Ctrl Alt Right Delete, a weekly email newsletter. This edition was published on 06/25/2017. Members of Factual Democracy Project have access to past editions. Subscribe to Ctrl Alt Right Delete.

I’ve been using machine learning techniques to identify several varieties of “troll” Twitter accounts that spread propaganda and false information. This material is largely of Russian origin and is targeted at right-wing American voters. I sorted the Twitter accounts into three categories:

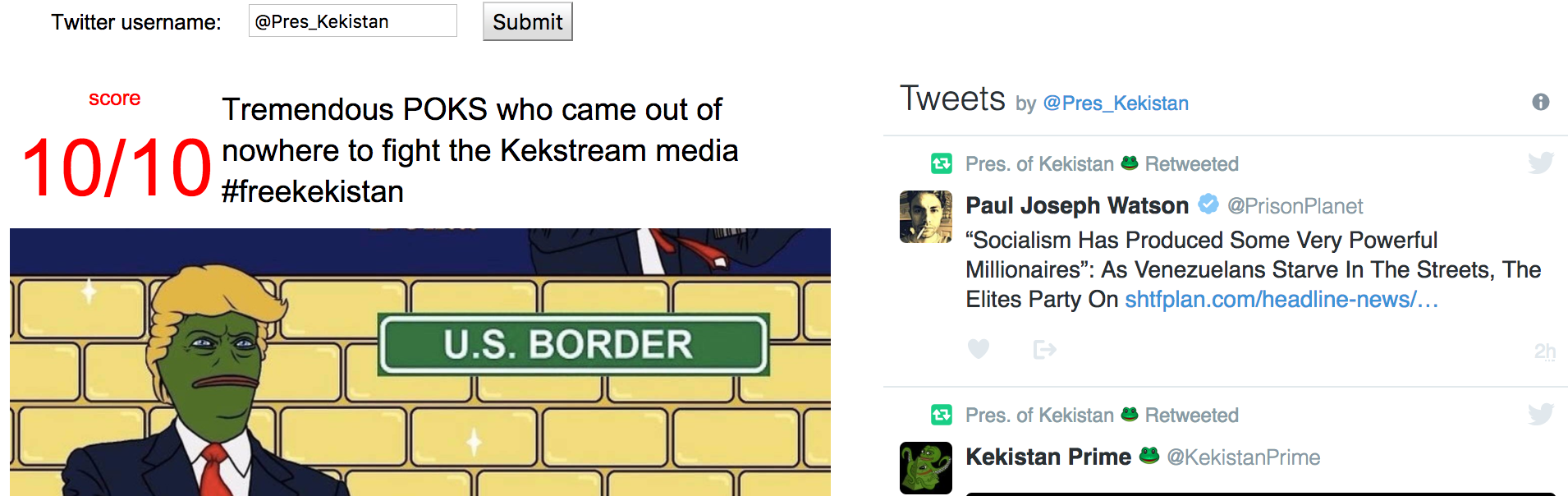

I’ve developed a simple website, Make Adverbs Great Again, that demonstrates this technique. You can enter a Twitter username and receive a score indicating how similar the system thinks that account is to known troll accounts.

The particular machine learning technique used here is a type of neural network called a multilayer perceptron (MLP). For the technically inclined: I wrote this in Python and used scikit-learn’s implementation of the MLP neural network.
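For readers who want to see what an MLP classifier looks like in scikit-learn, here is a minimal sketch. The article does not say which account features the classifier uses, so the feature matrix below is synthetic placeholder data (hypothetical numeric features per account), and the layer size and iteration count are illustrative assumptions, not the author’s settings.

```python
import numpy as np
from sklearn.neural_network import MLPClassifier

# Hypothetical data: one row per account, one column per numeric feature.
# The real feature set is not described in the article; these are placeholders.
rng = np.random.default_rng(0)
n_safe, n_troll, n_features = 300, 100, 8
X_safe = rng.normal(loc=0.0, scale=1.0, size=(n_safe, n_features))
X_troll = rng.normal(loc=1.5, scale=1.0, size=(n_troll, n_features))
X = np.vstack([X_safe, X_troll])
y = np.array([0] * n_safe + [1] * n_troll)  # 0 = safe, 1 = troll

# A small multilayer perceptron with one hidden layer of 16 units (assumed size).
clf = MLPClassifier(hidden_layer_sizes=(16,), max_iter=1000, random_state=0)
clf.fit(X, y)

# Classify a new (synthetic) account: 1 means "classified as troll".
new_account = rng.normal(loc=1.5, scale=1.0, size=(1, n_features))
print(clf.predict(new_account))
```

Each call to `predict` returns a hard yes/no label per account, which matches the per-network yes/no decision described below.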

This type of system is “trained” using a set of known examples. For this project, I identified roughly 3,000 safe and 1,000 troll accounts to use as the training set. The safe accounts were drawn from a variety of sources: some are political, some promotional, some purely social. The troll accounts were identified by searching Twitter for specific hashtags associated with known false stories (#PizzaGate, #SethRich, #SickHillary, etc.) and selecting a cross-section of accounts that included both bots and human users. All the sample troll accounts were strongly focused on propagating these stories, and each had also tweeted multiple links to state-sponsored Russian media sites such as RT or Sputnik.
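The troll-selection criteria above (use of a target hashtag plus multiple links to Russian state media) can be expressed as a simple filter for shortlisting candidate accounts before manual review. This is a hypothetical sketch, not the author’s process: the tweet data structure, the helper names, the domain list (`rt.com`, `sputniknews.com`), and the threshold of two links are all assumptions.

```python
import re
from urllib.parse import urlparse

# Assumed inputs: hashtags named in the article, and assumed domains for RT and Sputnik.
TARGET_HASHTAGS = {"#pizzagate", "#sethrich", "#sickhillary"}
STATE_MEDIA_DOMAINS = {"rt.com", "sputniknews.com"}
URL_RE = re.compile(r"https?://\S+")
HASHTAG_RE = re.compile(r"#\w+")


def state_media_link_count(tweets):
    """Count links in a list of tweet texts that point at the assumed state-media domains."""
    count = 0
    for text in tweets:
        for url in URL_RE.findall(text):
            host = (urlparse(url).hostname or "").lower()
            # Match the domain itself or any subdomain such as www.rt.com.
            if any(host == d or host.endswith("." + d) for d in STATE_MEDIA_DOMAINS):
                count += 1
    return count


def uses_target_hashtag(tweets):
    """True if any tweet contains one of the target hashtags (case-insensitive)."""
    return any(
        tag.lower() in TARGET_HASHTAGS
        for text in tweets
        for tag in HASHTAG_RE.findall(text)
    )


def is_troll_candidate(tweets, min_links=2):
    """Hypothetical shortlist rule: target hashtag plus at least `min_links` state-media links."""
    return uses_target_hashtag(tweets) and state_media_link_count(tweets) >= min_links


example = [
    "Read this #PizzaGate https://www.rt.com/news/123",
    "More here https://sputniknews.com/x",
]
print(is_troll_candidate(example))
```

A filter like this only narrows the search; the article describes a human selecting a cross-section of bots and human users from the results.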

Training involves an element of chance, so the accuracy of the resulting networks varies somewhat. I trained 100,000 neural networks and kept the ten most accurate. The resulting networks correctly classify accounts with 95% accuracy, and a larger share of the errors are false negatives (troll accounts that were missed) than false positives (accounts incorrectly classified as trolls). Each individual network makes a yes-or-no decision as to whether a given account is a troll, and the score shown on the website is the number of networks (out of ten) that voted “yes”. It’s worth noting that all ten can be wrong, and even 10/10 scores are occasionally false positives.
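The train-many, keep-the-best, then vote scheme can be sketched in a few lines with scikit-learn. Assumptions: synthetic placeholder features (the real ones are not described), a single validation split for ranking the networks, and 30 candidate networks instead of 100,000 so the sketch runs quickly. Only the random seed differs between candidates, which is one way the “element of chance” shows up.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

# Hypothetical synthetic data: 300 "safe" and 100 "troll" accounts, 8 features each.
rng = np.random.default_rng(1)
n_features = 8
X = np.vstack([rng.normal(0.0, 1.0, (300, n_features)),
               rng.normal(1.5, 1.0, (100, n_features))])
y = np.array([0] * 300 + [1] * 100)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.25, random_state=1, stratify=y)

N_CANDIDATES = 30  # scaled down from the 100,000 networks mentioned in the article
N_KEEP = 10        # the article keeps the best ten

# Train candidates that differ only in their random initialization.
candidates = []
for seed in range(N_CANDIDATES):
    net = MLPClassifier(hidden_layer_sizes=(16,), max_iter=500, random_state=seed)
    net.fit(X_train, y_train)
    candidates.append((net.score(X_val, y_val), net))

# Keep the networks with the highest validation accuracy.
candidates.sort(key=lambda pair: pair[0], reverse=True)
ensemble = [net for _, net in candidates[:N_KEEP]]


def troll_score(features):
    """Number of kept networks (0 to N_KEEP) that vote 'troll' for one account."""
    row = np.asarray(features, dtype=float).reshape(1, -1)
    return int(sum(net.predict(row)[0] for net in ensemble))


print(troll_score(X_val[0]))
```

One design caveat: choosing networks by their validation accuracy makes that same accuracy an optimistic estimate, so a separate held-out test set gives a fairer measure of how the ensemble performs on new accounts.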

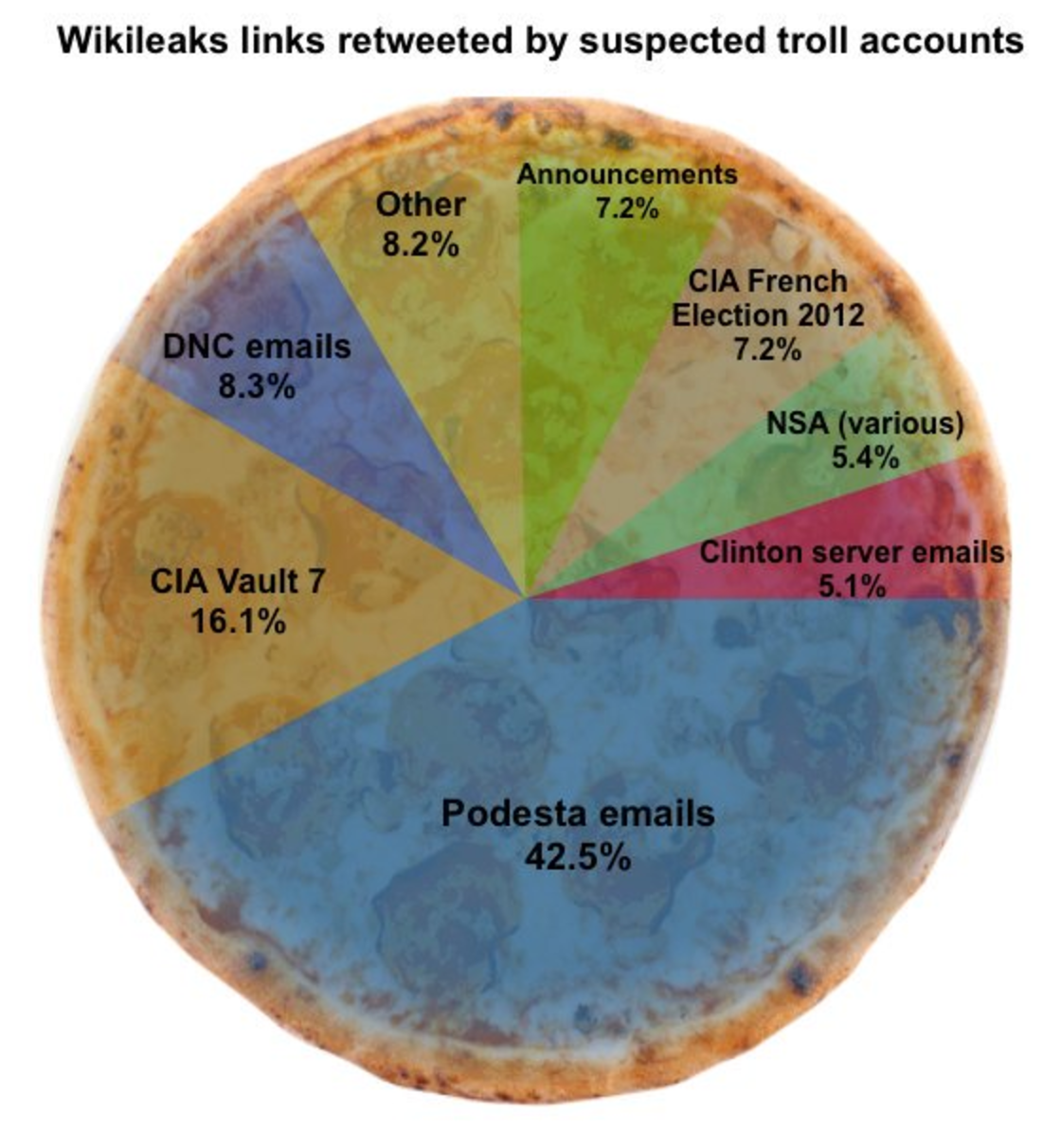

In terms of future work, there are a number of additional avenues worth exploring. There are several ways of improving the accuracy further, ranging from improving the training data to using more complex classification techniques. The more interesting avenue for me from a research perspective, however, has been to use the classifier to identify large numbers of potential troll accounts for further study. I’ve used it as a source of data for several bulk analysis projects. This pie chart showing the breakdown of Wikileaks links shared by bots and trolls, for example, was produced using a sample set of over 14,000 accounts identified in this way. These techniques could also likely be generalized and adapted to other social media platforms, such as Facebook or Reddit.

If you’re interested in working with this yourself, you can download the source code for the classifier here. You’ll need to sign up for a Twitter API key (free) and provide your own sets of example “safe” and “troll” accounts in order to make use of it.


Conspirador Norteño is an activist and the creator of Make Adverbs Great Again.

This is worth spending some time on. Researchers at the Oxford Internet Institute, University of Oxford, have put together the most comprehensive study of computational propaganda (i.e., how bots and trolls are deployed on social media to manipulate us) that I’ve seen. The report includes extensive case studies from the United States and eight other countries: China, Russia, Poland, Brazil, Canada, Germany, Ukraine, and Taiwan.

Four key quotes from the Executive Summary:

As I’ve said before, the Frog Squad are organized as a global movement against globalism. These case studies are not only a sobering reminder of what we’re up against; they also give us a good look at their artillery. The good news is that we’re also learning a good deal about their tactics.

Want even more links? Be sure to like the Ctrl Alt Right Delete Facebook page. I post articles there all week. A lot of what doesn’t make it here will get posted over there.

Have an idea for Ctrl Alt Right Delete? I’m always on the lookout for more voices. You can pitch me by replying to this email.

Thanks as always to the amazing Nicole Belle for editing!

