Below is an archived edition of Ctrl Alt Right Delete, a weekly email newsletter. This edition was published on 10/01/2017. Members of Factual Democracy Project have access to past editions. Subscribe to Ctrl Alt Right Delete.

By Greg Greene

I think a ton these days about our digital environment — and I use that phrasing with purpose.

Just as we depend on the climate and biosphere that surround us, we’ve been enveloped by an array of digital services — spaces where we work, entertain ourselves, gather information, and where information is gathered on us. And without much fanfare, our society has grown dependent on those spaces’ health.

When we talk of the natural environment, we know how to explain mankind’s effect on it. If storms wreak havoc across the Caribbean and the Gulf, we can say greenhouse gases trapped more of the heat that fuels such hurricanes. When Volkswagen rigs diesel cars to cheat in emissions tests, we can measure the smog and respiratory ailments that result. When we examine the Flint water crisis, we can see how lead poisoning caused a dip in local fertility rates.

In each of those cases, by taking a closer look, one can spot an externality: a cost imposed on people who did not choose to bear it.

In Flint, the state of Michigan sent lead pollution soaring when it switched the debt-saddled city to a cheaper water source. Volkswagen blew off its obligation to make diesel engines burn cleanly — which let the company sell cars at lower prices, but polluted the skies. With the climate … well, we all bear the cost of advanced economies’ fossil-fuel dependence.

I’ve said a lot now, so here’s my point: consider some of the dystopian stories in headlines these days. Aren’t many of them, at bottom, tales of externalities — ones in the digital environment, but externalities nonetheless?

Take this example: when internet-connected devices sold with flimsy password protection — if any at all — get conscripted into a botnet capable of crippling the internet, all of us bear the cost of gadget makers’ lack of attention to, or interest in investing in, digital security.

If, to get whimsical, a credit bureau left a server vulnerability unpatched and lost personal identifying information on 143 million people, that company deserves blame for shrugging off the expense of protecting your data — and passing the cost along to you.

Now: Facebook and Google are big companies — titans in our digital environment. (Quick: imagine living wholly aloof from either of them.)

Over time, they’ve refined algorithms that have helped them rank among Americans’ key sources of information. In the process they’ve earned massive profits, due in part to business models premised on the exercise of little to no editorial oversight. (Facebook famously fired the team responsible for keeping fake news out of its trending topics box — with predictable results.)

Let’s zero in on Facebook. Credit where due: the company has, as Mark Zuckerberg wrote this week, “[brought] people together and buil[t] a community for everyone.” That’s fine … as far as it goes.

But Facebook has also made it simpler for misinformation and fake news to slosh into minds around the globe. As UNC professor Zeynep Tufekci put it in The New York Times, “[T]he unfortunate truth is that by design, business model and algorithm, Facebook has made it easy for it to be weaponized to spread misinformation and fraudulent content.”

This situation, at its core, amounts to an externality.

Democratic societies rely on accurate news; voting publics and elected officials alike use information, preferably the well-grounded kind, to make decisions. Facebook, as it grew into a behemoth, shrugged off the labor-intensive task of vetting information for accuracy, and passed the cost of ferreting out fake news in our digital environment to … well, us.

And some of us aren’t handling it so well.

Churchill once said: “We shape our buildings, and afterwards our buildings shape us.” The same holds true for the architecture of algorithms that shape our digital environment — and we increasingly live in that digital environment. Attention must, or ought to, be paid.

Put me down with coder Maciej Cegłowski: “Together with climate change,” he says, “[the] algorithmic takeover of the public sphere is the biggest news story of the early 21st century.”

More thoughts on how our societies should come to grips with that reality in a future newsletter.

Greg Greene has worked as a Washington-based digital communications strategist for a decade and a half. You can find him on Twitter at @GGreeneVa.

Factual Democracy Project just released our first poll via Public Policy Polling. It shows that Americans remain surprisingly aware — and strongly disapprove — of how Facebook enabled Russians to use paid media and fake news to influence American voters in the most recent election.

We learned two important things from this poll. First, there’s broad bipartisan agreement that Russia shouldn’t be allowed to buy political ads targeted at American voters. Second, there’s bipartisan agreement that Facebook should hold itself to the same standard as a media company and not allow fake news to be published and shared on its platform. Facebook is one of the world’s most profitable companies. The public understands that and expects it to protect our democracy by fighting back against disinformation campaigns that target American voters.

The poll of 865 registered voters was conducted Sept. 22-25 by Public Policy Polling. The margin of error is 3.3 percent.

Read more about the poll at McClatchy.

Want even more links? Be sure to like the Ctrl Alt Right Delete Facebook page. I post articles there all week. A lot of what doesn’t make it here will get posted over there.

That’s all for this week. Thanks, as always, to Nicole Belle for copy editing.

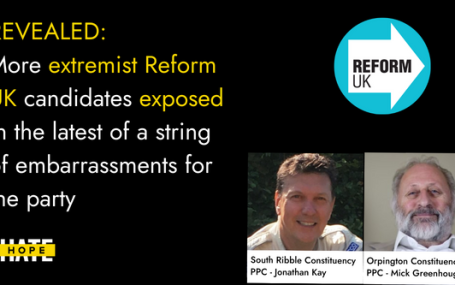
