
In last week’s newsletter I touched on the disinformation and conspiracy theories that always follow a mass shooting. It happens every time, as soon as the news breaks. The goal isn’t to push a single narrative but to pollute the conversation so badly that people don’t know what to believe, whether they’re reporters or just checking social media to see what’s happening.

This shooting was different in that the student survivors began speaking out immediately, advocating for themselves and planning direct actions. Hailing from a generation that’s been raised on social media, these young people knew how to get their message out online. In doing so they became a target for the trolls and conspiracy theorists.

Media Matters (where I’m a visiting fellow) has been tracking the conspiracy garbage being flung at the students since last Friday. In addition to more than 30 examples, Media Matters also highlighted how tech companies failed to protect the students. To be clear they failed to protect minors in a completely predictable scenario. Days later Twitter Safety assured users they were using every tool in their toolbox to protect the Parkland survivors and indicated that the #TwitterLockout the Frog Squad was freaking out about was related to that. YouTube had to pull down a conspiracy video about one of the students that had become the number one trending video on the platform. A spokesperson for Facebook said that they were “aware” of the posts related to the Florida shooting conspiracies, but said that the company doesn’t “have policies in place that require people to tell the truth.”

By the time tech companies stepped in it was too late. Parkland conspiracy theories had reached the traditional media and survivor Rachel Catania was asked on live television to confirm that she was not a crisis actor or a plant.

This is what’s been weighing on my mind as I consider Parkland conspiracies and the latest Mueller indictments from last week. Every day I’m asked what people can do to protect themselves online from fake news, doxxing, harassment and so on. The truth is there isn’t much individuals can do. Pressuring the tech companies to protect consumers and pressuring our government to better regulate the tech industry are the most meaningful courses of action.

These are systemic issues. Hostile actors both foreign and domestic have weaponized social media against us. Little is being done to stop them. They’re exploiting our cultural weaknesses masterfully, and frankly America gives them plenty of material to work with. Taking back Congress in 2018, taking back the White House in 2020 aren’t going to be enough. We live in a country where activists on the left and right unknowingly work(ed) with Russian operatives to sow chaos and discord — they participated in an attack on America. Where the best case scenario is that our President is an unknowing useful idiot but could prove to be an active participant in colluding with a foreign power. There are no short term fixes.

Despite all of this, the Parkland survivors give me hope. As Charlie Warzel pointed out in BuzzFeed:

The pro-Trump media will no doubt continue its onslaught. And because the online ecosystems that undergird all of these interactions are deeply broken, the assault against David Hogg and his classmates will likely continue to spread across platforms like YouTube and Facebook and Twitter. But unlike the pro-Trump media’s usual enemies, the Parkland students innately understand how to use this broken system to their advantage. They know intuitively what the pro-Trump media has known (and used to its benefit) for years now: The way to win an information war is not to shy away from conflict online, but to lean into it.

America failed to keep Parkland survivors safe first at school and then as they became the center of a media firestorm, but they are clearly more prepared to handle it than the generation before them. May we all learn from their example.

Fake News, the Alt-Right and Florida

It’s important to understand how the far right use fake news before we can effectively combat it.

By HOPE not hate’s Right Response Team

While repugnant, shows of support or veneration of the murderer Nikolas Cruz by the alt-right in the wake of an attack are just one element of the alt-right’s response. Within moments of the reports of the tragic events hitting the news, the alt-right sprang into action, manipulating facts, spreading rumors and formulating and disseminating fake news.

The events in Florida last week were tragic and we are right to be angry and upset with those on the far right who seek to destroy the truth around such events for their own political gain. Yet anger and outrage will not be enough and if we are to effectively fight back against the prejudiced purveyors of hateful fake news, we need to do much more work to understand how it works, how it spreads and what impact it has.

That’s why HOPE not hate has designed cutting edge software to track the formation and dissemination of far-right fake news, giving us new insights into how it spreads and how we can intervene earlier than ever before.

Find out more about how the far right make and spread fake news and its possibly dangerous effects HERE.

‘The Year in Hate’ from the Southern Poverty Law Center

The SPLC have published their yearly review of the state of hate in America. The report shows that Trump buoyed white supremacists in 2017, sparking backlash among black nationalist groups. According to the SPLC there are now 954 hate groups operating in the U.S.

‘Dead Reckoning’ from Data and Society

Dead Reckoning, a new report by Data and Society, clarifies current uses of “fake news” and analyzes four specific strategies of intervention. It analyzes nascent solutions recently proposed by platform corporations, governments, news media industry coalitions, and civil society organizations. Then, the authors explicate potential approaches to containing “fake news” including trust and verification, disrupting economic incentives, de-prioritizing content and banning accounts, as well as limited regulatory approaches.

Are bots a danger for political election campaigns?

Scientists at Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU) have investigated the extent to which such autonomous programmes were used on the platform Twitter during the general elections in Japan in 2014. By using methods taken from corpus linguistics, they were able to draw up a case study on the activity patterns of social bots. At the same time, the FAU researchers gained an insight into how computer programmes like these were used, and recognised that nationalistic tendencies had an important role to play in the election, especially in social media.

“Alt-Lite” Bloggers and the Conservative Ecosystem

“This research paper examines the important role that ‘alt-lite’ bloggers play in promoting, amplifying, and fortifying Donald Trump’s anti-establishment message to his conservative supporters.”

Like Ctrl Alt Right Delete? Help us spread the word by forwarding it to a friend. Did someone forward you this email? You can subscribe here.

This week was rough. You’ve earned two GIFs. Talk to you next week.

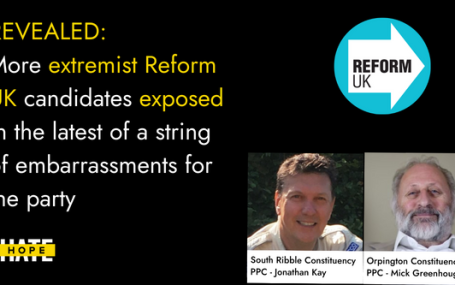
