By Melissa Ryan

This week’s newsletter is focused on deplatforming. We’ve been planning it for weeks, but as often happens with our thematic issues, the timing couldn’t be more apt. After a week in which Facebook and YouTube made clear that politicians won’t be held to the same standards as the rest of us on their platforms, President Trump has repeatedly used his Twitter account to attack the Ukraine whistleblower and call for their outing, to threaten civil war from his base, and to suggest that the House’s impeachment inquiry was actually a coup.

Trump’s language seems intended to incite violence, and patience is starting to wear thin. Senator and presidential candidate Kamala Harris has called on Twitter to suspend President Trump’s account. So has Recode founder and New York Times columnist Kara Swisher, whose column calling for Trump’s suspension also lays out some hypothetical scenarios, worth considering, about what could happen once Trump is no longer in office.

Defining what deplatforming is and isn’t can be a challenge. Merriam-Webster hasn’t yet added deplatforming to its dictionary, but it has defined the word in an article about emerging words: “Refers to the attempt to boycott a group or individual through removing the platforms (such as speaking venues or websites) used to share information or ideas” (with a note that the term is still fluid and the definition might change).

Deplatforming as a concept can make people nervous, even friends and allies on the left. I think part of the reason is that conversations around deplatforming tend to center on the deplatformed figures rather than on the users who have been harmed by them. There are a few exceptions, mostly after high-profile harassment cases, such as when Milo Yiannopoulos’ racist and misogynist harassment of actress Leslie Jones drove her off Twitter, or after terrorist attacks whose perpetrators were radicalized online, such as the Christchurch and El Paso mass shootings.

But far-right figures and communities aren’t deplatformed for their expression. They get deplatformed because they’re harming the rest of us through targeted harassment, spreading hate, or manipulating social media. And for the most part, they only get deplatformed when tech platforms feel enough public pressure to act. For the past three years, tech companies have had to be shamed into changing their content moderation policies, and then shamed again into enforcing them. The pressure to remove content and deplatform repeat offenders has come from governments, journalists, researchers, and advocates, and it often still isn’t enough to move the companies to do the right thing and protect the vast majority of their users.

For all the naysaying (and there’s a lot of it!), anecdotal evidence suggests that deplatforming is an effective tactic for curbing the spread of hate, harm, and harassment online. Can you remember the last time 8chan made the news? Milo Yiannopoulos? Alex Jones?

Deplatformed figures and communities can’t spread hate, they can’t drive media narratives, they can’t make a profit, and, crucially, they can’t radicalize others and grow their audiences. Joan Donovan, speaking to Vice last year, put it this way: “Generally the falloff is pretty significant and they don’t gain the same amplification power they had prior to the moment they were taken off these bigger platforms.” Deplatforming works.

By Joe Mulhall, HOPE not hate Senior Researcher

Ever since major social media platforms became ubiquitous in modern society, debates about their obligation to remove hate speech and hateful individuals have raged, and these debates are, of course, complex. Yet the last decade has seen far-right extremists attract audiences unthinkable for most of the postwar period, and the damage has been seen on our streets, in the polls, and in the rising death toll from far-right terrorists.

Deplatforming is not straightforward, but it limits the reach of online hate, and social media companies have to do more and do more now. Read why here.

Want even more links? Support us on Patreon to receive a second ICYMI post just for CARD members.

Got feedback or questions? We love hearing from our readers. Reply directly to this email with your comments. I also love links to articles and pieces of research that we might have missed.

I read every email and respond to most. You can also hit us up on Twitter: @melissaryan, @simonmurdochhnh, and @hopenothate.

Consider supporting Ctrl Alt-Right Delete as a paying member via our Patreon page. Members receive additional content including a bonus post of ICYMI links as well as rewards by tier.

That’s a wrap!

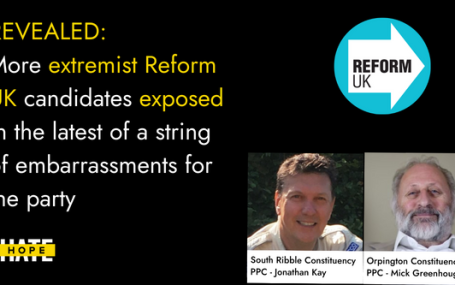

HOPE not hate reveals two more extremist candidates from Reform UK, in the latest of a string of embarrassments for the party UPDATE: Just hours…