

Online Islamophobia remains an ingrained issue when it comes to tackling hate in the UK. The police recorded 89 online anti-Muslim hate crimes in 2018, while monitoring group Tell MAMA recorded 327 verified online anti-Muslim incidents in the same year, down 10% from 2017 but still 5% up on 2016 figures. Explaining the discrepancy with police figures, Tell MAMA noted that as it was “primarily a victim support agency” it “more often deal(s) with cases of discrimination, hate speech, and anti-Muslim literature than the police, as these may not warrant criminal convictions”. (The Home Office October 2019 hate crime report also highlighted that police statistics on online offences were “likely to have been an underestimate”.) Such breadth is highlighted below, where we examine the spread of violent anti-Muslim ideas and disinformation, as well as the online ecosystems in which these grow.

Official figures for 2019 are currently unavailable, but Tell MAMA recorded a spike of almost 593% in reported anti-Muslim incidents in the UK following the Christchurch terror attack, including online threats to Muslims made in relation to the attack.

Christchurch underlined how interconnected online and offline anti-Muslim hatred is today; indeed, it emphasised the need to recognise the increasing porousness of the online/offline distinction. Reviewing the attacker’s manifesto after the attack, HOPE not hate’s Patrik Hermansson noted that the numerous ideologies referenced and the language used clearly indicate that the attacker had developed his world view online. The attacker wrote that “you will not find the truth anywhere else” other than the internet. Furthermore, as Patrik commented, it is important to understand that far-right terror does not end when the last bullet has been fired. The ensuing media coverage and virally replicated memes are often part of a perpetrator’s plan to sow division and hatred. The same intention appears to lie behind efforts to radicalise. Patrik highlighted that, along with the manifesto, the far-right keywords graffitied onto the killer’s weapons functioned as gateways to extreme online environments, able to be picked up by viewers of the livestream of the attack.

Christchurch also typified the densely transnational nature of online Islamophobia and hate more generally today. As Tell MAMA noted in their report: “Although we verify reports of online anti-Muslim hatred to ensure only those based in the UK are recorded in our research, globally trending debates and news stories can motivate and spark trends in anti-Muslim rhetoric”.

Research from Cardiff University’s HateLab published in July 2019 broke ground on developing an understanding of these trends. It indicated “a consistent positive association between Twitter hate speech targeting race and religion and offline racially and religiously aggravated offences in London,” suggesting that “online hate victimisation is part of a wider process of harm that can begin on social media and then migrate to the physical world.”

Transnational interaction continues to play a role in the spreading of Islamophobia online more generally, beyond the extremes detailed above. Online forums and image boards populated by the international far right coordinate Islamophobic social media campaigns and spread wholly fabricated messages across social media.

One example highlighted by HOPE not hate’s research concerns the image of a woman in a headscarf taken on the day of the Westminster attack, in March 2017. The picture shows a Muslim woman walking with a phone in her hand, past a group of people aiding one of the victims of the attack. It gained significant attention after a Twitter user called @Southlonestar claimed that the woman was indifferent to the suffering of others and that this was generally true of all Muslims. The claim that the woman was indifferent was not true (it has been refuted by both the photographer and the woman herself). Other pictures in the series show her noticeably distraught by what she had just witnessed.

In fact, @Southlonestar was one of the approximately 2,700 accounts that Twitter identified in November 2017 as being part of a Russian-sponsored influence operation. Along with spreading Islamophobic hate, it also spread messages before the US presidential election and was one of the accounts that tweeted pro-Brexit messages on the day of the EU referendum in June 2016. Regardless of the circumstances behind the picture, it was quickly shared by several major far-right and Islamophobic accounts on Twitter, including those of alt-right leader Richard Spencer and Islamophobe Pamela Geller.

However, this image of the Muslim woman would soon be used for even more nefarious purposes. The same evening it was shared, the picture was appropriated by users on the /pol/ board on the online forum 4chan. One user posted a picture where the woman was superimposed into another setting, with the simple comment next to it “you know what to do”, meaning that he wanted his fellow users to create images superimposing the woman into other settings.

In the comments that followed were hundreds of variations of the posted image, most situating the woman next to various kinds of atrocities. Clearly inspired by the original post or its derivatives, the pictures aimed to send the message that the woman (and Muslims overall) were unmoved by the suffering of others – or even enjoyed it. Many of the doctored images were extreme and obvious parodies and did not leave the forum. In one she is seen walking past what looks like a Nazi extermination camp in Germany. Importantly, however, some did not stay on 4chan. Two weeks later a manipulated image was spread on social media in Sweden, after four people were killed in a terrorist vehicle attack in Stockholm. The image showed a paramedic walking between what looked like covered bodies while in the background the familiar silhouette of the woman on Westminster […]

[…] has also highlighted how Facebook’s communal and private dimensions are playing a role in catalysing Islamophobia and hate more broadly.

Facebook groups in particular are built such that they can lead to both a widening and a deepening of an individual’s prejudiced politics through content shared by other members within that group (so too on pages). Far-right activists have, in other online contexts, actively attempted to lead individuals they perceive to be susceptible to far-right ideas into the movement or encouraged people who are engaged in more mainstream or moderate far-right politics to take up more extreme positions. One example is the case of the commenting platform Disqus, which provides comment functionality to many large news sites. There have been coordinated attempts by far-right activists to engage in comments fields on mainstream news sites and alternative news outlets such as Breitbart News as a way to propagate far-right ideas to the readership of these sites.

Far-right users accessed articles and engaged in conversations in the comments fields in order to link to more extreme positions related to the topic of the article or use it to argue for other far-right positions. The goal was to take those who agree with more mainstream far-right ideas or have specific grievances around, for example, immigration and move them deeper into the far right or add more extreme interpretations of a certain issue. The tactic could, for example, be used to connect issues like immigration to antisemitic and anti-Muslim conspiratorial ideas.

The danger of this practice is that when an individual joins a Facebook group reflecting a specific interest or anger that they have, they can be set on a path to consume wider far-right material they otherwise would not have come across. Single issue engagement towards, for example, the construction of a new mosque in their area, can via Facebook groups introduce them to other Islamophobic ideas, and wider far-right propaganda on other topics. This can contribute to the adoption of more extreme views and broaden their prejudice from a single issue or few issues to a more fully formed far-right worldview. Importantly, in the case of Facebook groups, the effect of being introduced to new far-right positions needs to be seen in the context of the relationship between members in a group. That they have joined a group, sometimes a relatively small one, generally indicates a level of support for similar ideas. As such, there is a risk that a perceived sense of commonality and agreement can make members more trusting and receptive towards content posted by other members in the group. This could further exacerbate the risk of the broadening and/or deepening of their far-right engagement.

Illustrative of this is Yellow Vests UK, a closed group which presents itself as a British counterpart to the original French Gilets Jaunes. It has approximately 1,400 members at the time of writing. Like the original movement, at least superficially, the group is centred on anti-government and populist sentiments: that the UK government does not represent the “will of the people” and that it has “contempt for the working class.” A vast majority of posts in the group reflect a feeling of betrayal of the electorate by UK politicians and highlight the incompetence, as well as the supposed corruption, of UK politicians and especially, though not exclusively, the Labour Party. Content about British people not getting the support they need from the social security system is also common and is similarly used to highlight the failure of the state. Smaller topics include occasional anti-immigration posts and posts romanticising British history, such as quotes by Winston Churchill and references to the Magna Carta. Despite this, since its creation in December 2018 the group has exhibited frequent Islamophobia, often demonstrating a slide into more explicitly racial nationalist politics. A user in May 2019, for example, posted a message making reference to the ‘indigenous British’, and replies included a further change of language to “white British”.

The group has also seen a broadening of far-right views, from anti-LGBT+ views to posts about the ‘Kalergi Plan’ far-right conspiracy theory. This alleges that there is a deliberate plan to undermine white European society by a campaign of mass immigration, integration and miscegenation conducted by sinister (and often Jewish) elites.

The above areas of developing online Islamophobia in the UK (and internationally) do not always occur in isolation. An example similar to that given above, of the woman passing the site of the Westminster terror attack, illustrates both misinformation through images and the dynamics of Facebook groups. The use of photos stripped of context to incite anger and hatred against Muslims can be seen in Facebook groups that do not have Islamophobia as their apparent purpose. In the ‘Jacob Rees-Mogg: Supporters’ Group’, a public Facebook group with over 24,000 members that was ostensibly set up to promote the Conservative MP, a photo of Muslims praying in the road on London Bridge was posted with a caption that implied the men were choosing to pray illegally in the middle of a road and stopping traffic by doing so.

The post prompted an avalanche of vicious rhetoric from the group’s members, including dozens of violent fantasies of running the men over, spraying them with pig faeces or throwing them off the bridge. Even if it were true that the men were illegally blocking a road for their prayers, this level of vitriol would be extreme. Yet the missing context – perhaps deliberately obscured by those sharing the image – was that the road had already been closed by a minicab drivers’ strike. Both lanes were blocked by minicab drivers – of every religion and none – who had parked their cars in protest against the congestion charge. Those drivers who happened to be Muslim were simply observing their prayers while doing so.

As with the Yellow Vests UK group, there are many reasons that someone might choose to join the ‘Jacob Rees-Mogg: Supporters’ Group’ – support for his stance on Brexit, admiration for his character, or simple curiosity. But the lax moderation policy of the group’s admins – some of whom have posted anti-Muslim material themselves – means that, whatever members’ initial reasons for joining, they will subsequently be exposed to fake news, conspiracy theories and violently anti-Muslim rhetoric. Those who are strongly opposed to bigotry might immediately choose to leave the group, but those whose views on diversity and equality are less certain will find a steady drip-feed of weaponised far-right propaganda in their Facebook newsfeed from that point onwards. The effects of such exposure are hard to measure, but it is something that social media companies must urgently consider when evaluating their impact on our communities. With the means of propagating Islamophobia continuing to coalesce in online spaces in the UK and abroad, we must work harder to stem its spread.

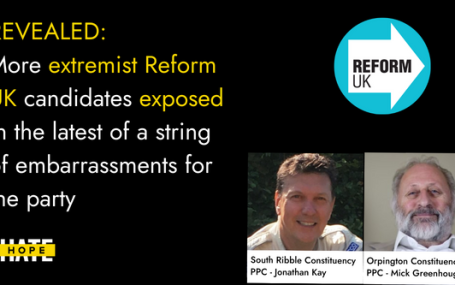
